Lancium Compute Features

Lancium Compute is a cloud grid that is optimized for the execution of High Throughput Computing (HTC) jobs. Unlike High Performance or Parallel Computing, where jobs are, in essence, a single large application split among a number of computational resources, HTC jobs tend to be a group of identical programs each running simultaneously on a group of computers and each working on a different set of input data in a “loosely coupled” fashion.

The Lancium Compute Grid model consists of multiple geographically distributed sites, each hosting a number of sub-clusters. Sub-clusters consist of a mix of CPU and GPU compute nodes and generally contain around 2000 physical CPU cores. GPU nodes are populated with either NVIDIA Tesla K40 or K80 GPUs.

High-Throughput Remote Command Line

The Remote Command Line service (RCLS) allows users to execute a Linux command line on Lancium Compute grid resources using Singularity container images for the execution environment. Users can either use Lancium-provided images that package a number of common ML and scientific applications or provide their own images using the Custom Image service described below.

RCLS jobs can be single core, multi-threaded multi-core, single node MPI, GPU-based, or a combination of the above. For each job execution, the user provides the details that determine what will be run along with the requirements for the execution environment. These details include:

- Singularity image - RCLS uses Singularity containers to provide secure isolation between job execution environments and to act as a unit of deployment for software prerequisites and configuration.

- Command Line - Command line execution takes place inside the container in a Job Working Directory (JWD) into which all of the specified job data has been made available.

- CPU Cores - “Cores” in RCLS refers to vCPUs/hardware threads rather than physical cores. RCLS jobs will have exclusive access to the number of vCPUs requested. Jobs can currently request a maximum of 72 cores in increments of 2 vCPUs.

- GPUs - Jobs can run on CPUs only or also be given access to a requested number of GPUs to accelerate calculations. Jobs can currently request a maximum of 2 K40 or 16 K80 GPUs.

- Memory - Jobs are given access to 4 GB of RAM for each 2 vCPUs requested. Jobs that are large enough to fill an entire compute node may receive slightly less than 4 GB per 2 vCPUs in order to leave headroom for the node’s OS.

- Maximum Run Time - Since many HTC applications have potentially unbounded run times, a user may indicate an upper bound for how long to let the job run. This can serve to limit cost exposure and simplify job management. The default maximum run time for RCLS jobs is 72 hours, but it can be adjusted as high or low as the user desires.

- Input Data - Data that is required for the job execution will be staged into the JWD inside the container prior to command execution. Input data can come from a number of sources. Files can be uploaded as part of the job specification process or a URL can be supplied from which the data will be downloaded. In both cases, the supplied data will be discarded after job execution is complete. In addition, input data can be uploaded to Lancium’s Persistent Data Service described below for more efficient use across multiple jobs. When archive files (currently .tar.gz and .zip) are specified, they will be automatically expanded inside the JWD before execution.

- Output Data - All RCLS jobs automatically have the standard output and standard error from their command captured and returned to the user at job completion. If the executed command creates additional files in the JWD that the user will need access to after completion, the filename(s) can be specified as part of the job setup. If files matching the requested names are found during the execution cleanup, they will be returned and made available to the user.
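The limits quoted in the list above can be checked before a job is submitted. The following is a minimal sketch, not part of the CLI: the function name is hypothetical, the lowercase GPU type strings "k40" and "k80" are assumptions (only "k40" appears in sample output later in this document), and a minimum of 2 vCPUs is assumed from the allocation increment.

```python
# Limits taken from the list above; names and GPU type strings are illustrative.
MAX_VCPUS = 72
VCPU_STEP = 2
MAX_GPUS = {"k40": 2, "k80": 16}


def check_request(vcpus, gpu_type=None, gpu_count=0):
    """Return a list of problems with a resource request (empty if none)."""
    problems = []
    if vcpus < VCPU_STEP or vcpus > MAX_VCPUS:
        problems.append(f"vCPUs must be between {VCPU_STEP} and {MAX_VCPUS}")
    if vcpus % VCPU_STEP != 0:
        problems.append(f"vCPUs are allocated in increments of {VCPU_STEP}")
    if gpu_count:
        limit = MAX_GPUS.get(gpu_type)
        if limit is None:
            problems.append(f"unknown GPU type: {gpu_type!r}")
        elif gpu_count > limit:
            problems.append(f"at most {limit} {gpu_type} GPUs per job")
    return problems


print(check_request(4))             # a request within the limits
print(check_request(73, "k40", 3))  # violates three limits at once
```

A check like this only catches obvious mistakes early; the grid remains the authority on what it will accept.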

Persistent Data Service

The Persistent Data Service provides Lancium Compute customers with a global-scale distributed file system in which to store job input data that is used across multiple jobs or over time. Storing large datasets in the Lancium Grid also allows new jobs to be created more rapidly, as the data set doesn’t need to be re-uploaded for each job submission. Persistent data is exposed to the user in a traditional hierarchical filesystem format, and most standard filesystem operations are available in addition to transferring data in and out of the Lancium infrastructure.

Custom User Images

In addition to the pre-packaged applications Lancium makes available as Singularity images, users are able to supply their own custom image that can run any application or workflow they choose on the Lancium Compute grid. Existing images in either Singularity or Docker format can be imported into the grid. Lancium Compute is also able to build custom images directly from a provided Singularity recipe or Dockerfile.

Lancium Compute Portal

The web-based UI for the Lancium Compute grid lives at https://portal.lancium.com. From the web portal, users can create accounts on the Lancium Compute grid. In addition to being the primary interface for account and billing maintenance, the web portal also provides basic job creation and status monitoring functionality. Bringing the web portal to feature parity with the CLI is on Lancium’s 2021 roadmap.

Lancium Compute API

The Compute Grid functionality that is exposed via the CLI is enabled by a REST API that allows programmatic interaction with grid objects including jobs, persistent data, and custom images. For use cases where scripted interaction using the CLI is undesirable, the underlying API can be utilized from any environment capable of making outgoing HTTPS requests. The same API keys described in the CLI documentation below can be used to generate short-lived access tokens for authenticating with the API endpoints. The full API documentation is available at https://lancium.github.io/compute-api-docs/

Lancium Compute CLI

The CLI is the primary and currently most full-featured method of user interaction with the Lancium Compute Grid. It supports both input and output in multiple formats to enable both command-line and scripted interactions. If an outdated CLI installation is used, the CLI issues a deprecated API warning.

Installation

The CLI is made available as a 64-bit static binary that can be downloaded and run immediately from any POSIX-compliant shell. The most current version is always available for download at https://portal.lancium.com/downloads/lcli.

Installation Example

$ curl -o ~/bin/lcli https://portal.lancium.com/downloads/lcli

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 8018k 100 8018k 0 0 8811k 0 --:--:-- --:--:-- --:--:-- 8802k

$ chmod +x ~/bin/lcli

$ ~/bin/lcli

Usage: lcli [OPTIONS] COMMAND [ARGS]...

Options:

--help Show this message and exit.

Commands:

data

image

job

resources

Shell Completion

The CLI provides command completion capabilities under Bash and Zsh.

Bash

$ _LCLI_COMPLETE=source_bash lcli > ~/.lcli-complete.sh

$ echo "source ~/.lcli-complete.sh" >> ~/.bashrc

Zsh

$ _LCLI_COMPLETE=source_zsh lcli > ~/.lcli-complete.sh

$ echo "source ~/.lcli-complete.sh" >> ~/.zshrc

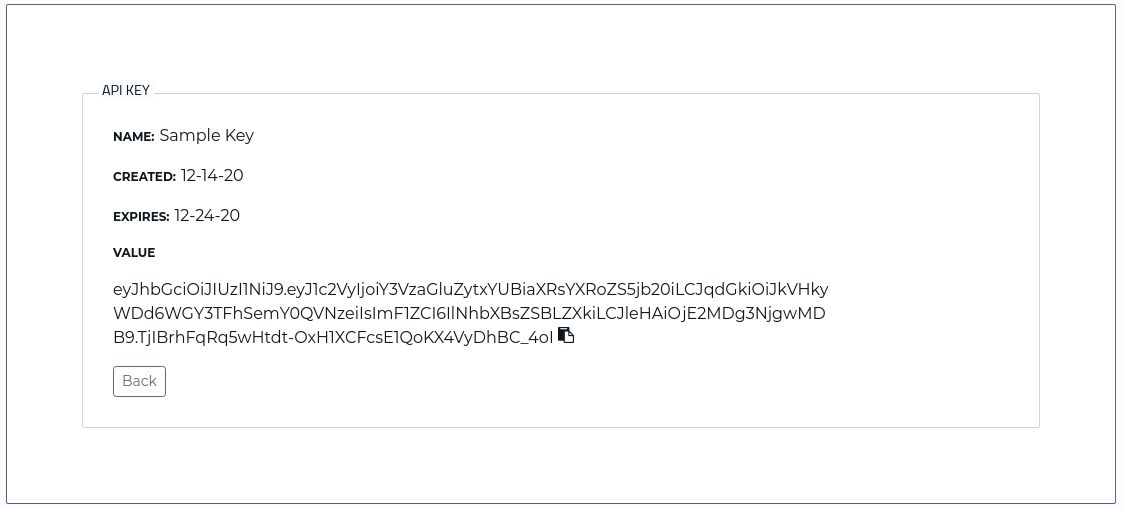

Authentication

CLI authentication is handled via API keys generated by the Lancium Compute web portal. Each API key can have a user-defined expiration date and multiple API keys are allowed per account.

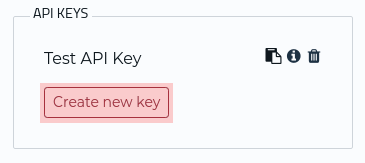

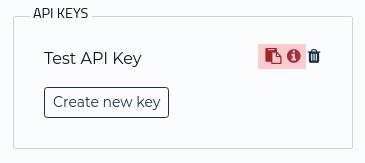

Generating an API Key

API Keys are generated on the web portal from the user’s account page.

- Log in to the Lancium Compute web portal

- Access the account detail page by clicking on the ‘Account’ link in the main navigation

- In the API Keys section of the account page, click ‘Create new Key’

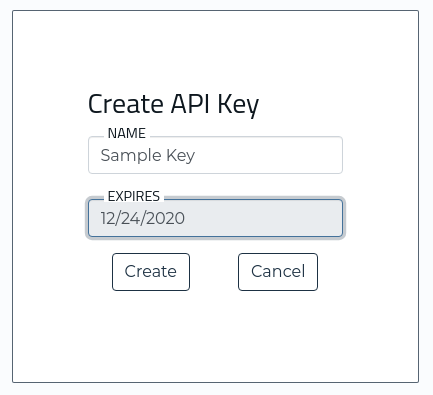

- Give the new key a name for future reference and, optionally, an expiration date

- The newly created key can either be copied directly to the clipboard or viewed

Using an API Key

Once an API key is generated, the CLI can be configured to use it via one of two methods.

Environment variable

The CLI will look for an environment variable named LANCIUM_API_KEY and, if found, use the value of that variable to authenticate.

export LANCIUM_API_KEY=<API_KEY>

Command line argument

The CLI additionally has an option flag that can define the API key to be used.

--api-key <API_KEY>

Regardless of the option that is used, the CLI will cache the API key credentials, once found, in the configuration file stored at ~/.lancium/lancium.conf.

Cached credentials can be overridden on a per-call basis using either the environment variable or the command line flag. If the command line flag and the environment variable are both present, the command line flag will take priority.
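The precedence described above (command line flag first, then environment variable, then cached credentials) can be modeled as a small lookup. This is a sketch of the documented ordering only, not the CLI's implementation; the function name and the returned source labels are hypothetical, and the cached value is passed in directly because the format of the configuration file is not documented here.

```python
import os


def resolve_api_key(flag_value=None, environ=None, cached_value=None):
    """Pick the API key using the documented precedence.

    flag_value   -- value passed with --api-key, if any
    environ      -- mapping to read LANCIUM_API_KEY from (defaults to os.environ)
    cached_value -- key previously cached by the CLI, if any
    """
    if environ is None:
        environ = os.environ
    if flag_value:
        return flag_value, "flag"
    env_value = environ.get("LANCIUM_API_KEY")
    if env_value:
        return env_value, "environment"
    if cached_value:
        return cached_value, "cache"
    return None, "missing"


# The flag wins even when the environment variable and a cached key exist.
print(resolve_api_key("key-from-flag", {"LANCIUM_API_KEY": "key-from-env"}, "cached"))
```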

Global Flags

In addition to the API key flag, the CLI has a number of other option flags that work globally across all CLI commands.

Quiet

--quiet or -q

Suppresses all output from the CLI

Verbose

--verbose or -v

Produces additional debug output

Format

The CLI can produce output in multiple formats. By default, the results of commands are output as pretty-printed JSON. Other available formats are:

--format json / -f json - outputs raw JSON with no formatting or line breaks. This is the most suitable format for consumption by other scripts.

--format table / -f table - outputs the command results as a formatted table. This is a useful human-readable format for displaying lists of jobs or images

--format csv / -f csv - outputs results as a series of comma-separated values. One object (job, image, etc.) per row.

Working with Jobs

Starting new jobs

Operations involving Lancium Compute RCLS jobs are accessed via the lcli job subcommand.

Commands and flags

There are four job commands that are related to the creation, specification, and submission of Compute jobs:

- lcli job run <job_specs> - The run command creates a new job with the given specifications and immediately submits it to the grid for execution.

- lcli job create <job_specs> - The create command creates a new job but leaves it in an unsubmitted, editable state.

- lcli job update <job_id> <job_specs> - The update command alters the job specs for jobs that are unsubmitted. Once a job is submitted to the grid for execution, this command can no longer be used to update the job.

- lcli job submit <job_id> - The submit command schedules the job for execution in the Lancium Compute grid.

All of the above commands except submit return a copy of the job information in their output. submit returns successfully if the job is accepted by the grid, or returns a non-zero exit code and an error message if there were issues submitting the job. Among the pieces of information returned for the new job is the id field. This job id is used to reference the new job in other job commands.

Specifications about the required job execution environment are passed to job commands via a number of command line flags:

- --spec <json_file> - Parses the specified JSON file for job specifications. If a specification file is given along with any of the individual flags listed below, the values specified with flags override the values in the file. Any job requirement that can be specified via command-line flags can also be set in a job specification file, but both do not need to be present. As an example, the following job spec includes all the configurable fields:

      {
        "name": "string",
        "notes": "string",
        "account": "string",
        "qos": "string",
        "image": "string",
        "command_line": "string",
        "expected_run_time": integer,
        "max_run_time": integer,
        "callback_url": "string",
        "resources": {
          "core_count": integer,
          "gpu_count": integer,
          "memory": integer,
          "gpu": "string",
          "scratch": integer,
          "mpi": true,
          "mpi_version": "string",
          "tasks": integer,
          "tasks_per_node": integer
        },
        "input_files": [
          {
            "source_type": "file",
            "source": "string",
            "cache": boolean,
            "name": "string"
          }
        ],
        "output_files": [
          {
            "name": "string",
            "destination": "string"
          }
        ],
        "environment": [
          {
            "value": "string",
            "variable": "string"
          }
        ]
      }

- --name <string> / -n <string> - Assigns a job name for display purposes.

- --notes <text> / --description <text> / -d <text> - Attaches free-form detailed job information.

- --account <string> - Provides internal billing or project references for the job. Included in the invoice line item for the job.

- --qos <string> - The QOS priority for this job. Lower QOS values will result in discounts from the standard core-hour cost. The current QOS tiers are:
  - high - Jobs at this QOS will be scheduled and running at least 90% of the time during any particular period.
  - medium - Jobs at this QOS will be scheduled and running approximately 50% of the time during any particular period.
  - low - Jobs at this QOS will be scheduled and running approximately 25% of the time during any particular period.
  - best_effort - Jobs at this QOS will be scheduled and running only when there are free resources available that can’t be allocated to jobs at a higher QOS.

  Currently, all jobs run at high QOS regardless of this setting.

- --command <string> - The command line to execute within the chosen Singularity image. The command line should either call a binary existing within the image or execute a script that was included as input data. Shell built-ins or chained commands using |, ;, or && will not execute properly and should be run from within a script.

- --image <string> - The path to the requested customer or Lancium-provided Singularity image.

- --max-run-time <integer> - The maximum amount of time in seconds to allow the job to run before automatically terminating it.

- --expected-run-time <integer> - The amount of time that this job should generally be expected to run. In the future, accurate estimates of run time will result in discounts from the standard core-hour cost.

- --cores <integer> / --core-count <integer> - The number of vCPUs to allocate to the job. vCPUs are always allocated in pairs, so odd numbers will be rounded up to the next multiple of two. In addition, if more than 4 GB per two vCPUs is requested via the --ram flag, the number of vCPUs will be increased to maintain a 4:2 ratio between memory and vCPUs.

- --mem <integer> / --ram <integer> - The amount of RAM in GB to allocate to the job. If less than 4 GB per two vCPUs is requested, the amount of memory will be increased to maintain a 4:2 ratio between memory and vCPUs.

- --gpu <string> / --gpu-type <string> - The GPU type required for the job.

- --gpus <integer> / --gpu-count <integer> - The number of GPUs to allocate to the job.

- --scratch <integer> / --disk <integer> - The amount of scratch disk space to provide for the job. Currently ignored.
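The rounding rules for the core and memory flags above can be worked through numerically. The sketch below is one reading of those rules, not the grid's actual code: the function names are hypothetical, and rounding a memory request that is not a multiple of 4 GB up to the next full pair of vCPUs is an assumption. It also shows the conversion from hours to the seconds expected by the max run time flag.

```python
import math

GB_PER_PAIR = 4  # 4 GB of RAM for each 2 vCPUs


def normalize(cores=2, mem_gb=None):
    """Return (cores, mem_gb) after applying the documented 4:2 ratio rules."""
    # vCPUs are allocated in pairs, so odd counts round up.
    pairs = math.ceil(cores / 2)
    if mem_gb is not None:
        # Asking for more memory than the ratio allows raises the vCPU count.
        pairs = max(pairs, math.ceil(mem_gb / GB_PER_PAIR))
    # Memory is then set (or raised) to 4 GB per pair of vCPUs.
    return pairs * 2, pairs * GB_PER_PAIR


def hours_to_seconds(hours):
    """The max run time is given in seconds."""
    return int(hours * 3600)


print(normalize(3))          # odd core count rounds up
print(normalize(2, 16))      # memory request drives the core count
print(normalize(4, 2))       # memory is raised to match the cores
print(hours_to_seconds(72))  # the default maximum run time in seconds
```

The last value, 259200, matches the max_run_time shown in the sample job records in this document.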

The following command line flags can be included multiple times in a single command:

- --input-file <string> - Specify the path to a file on the local file system that should be uploaded and made available to the job. If an archive file (.tar.gz or .zip) is specified, it will be automatically expanded inside a folder in the JWD before job execution.

- --input-file-cached <string> - Specify the path to a file on the local file system that should be uploaded and staged locally (but read-only) to the job’s working directory. Currently treated identically to standard input files.

- --input-data <string> - Specify the path to a file on Lancium’s Persistent Data Service that should be made available to the job.

- --input-data-cached <string> - Specify the path to a file on Lancium’s Persistent Data Service that should be made locally available (but read-only) to the job’s working directory. Currently treated identically to standard input data.

- --input-url <string> - Specify the URL of a file that should be downloaded and made available to the job.

- --input-url-cached <string> - Specify the URL of a file that should be downloaded and staged locally (but read-only) to the job’s working directory. Currently treated identically to standard input URLs.

- -o <string> / --output <string> / -o <string>:<path> / --output <string>:<path> - Specify a file name that should be expected to exist at the end of the job. If found, the file will be copied out of the JWD and made available to the user. If a path to persistent storage is provided, the file will also be saved there.

- -e <string>=<string> / --env <string>=<string> - Specify environment variables for the job.

- --duplicate <int> - Number of times to duplicate this job.

- --mpi - Flag to run an MPI job.

- --mpi_version - Version of MPI an MPI job should run on.

- --tasks - Number of MPI tasks.

- --tasks-per-node - Number of MPI tasks to run per node.
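The output and environment flag values map onto the output_files and environment entries of the job specification. As a rough illustration of that mapping (the helper names are hypothetical, and how the CLI itself handles values containing extra ":" characters is not documented), the two argument shapes can be split like this:

```python
def parse_output(arg):
    """Turn 'name' or 'name:path' into an output_files entry."""
    name, sep, destination = arg.partition(":")
    entry = {"name": name}
    if sep:
        # A path after the colon is the persistent storage destination.
        entry["destination"] = destination
    return entry


def parse_env(arg):
    """Turn 'VARIABLE=value' into an environment entry."""
    variable, sep, value = arg.partition("=")
    if not sep or not variable:
        raise ValueError(f"expected VARIABLE=value, got {arg!r}")
    return {"variable": variable, "value": value}


print(parse_output("ls_results.txt"))
print(parse_output("ls_results.txt:ls_results.txt"))
print(parse_env("test=hello"))
```

Splitting on the first "=" only keeps values that themselves contain "=" intact.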

Examples

Create and submit a job in a single step using command line flags

$ lcli job run --name "List job working directory" \

--command "ls" --image lancium/ubuntu --cores 4 --mem 8

{

"id": 12646,

"name": "List job working directory",

"status": "created",

"qos": "high",

"command_line": "ls",

"image": "lancium/ubuntu",

"resources": {

"core_count": 4,

"gpu_count": null,

"memory": 8,

"gpu": null,

"scratch": null

},

"max_run_time": 259200,

"created_at": "2021-02-02T19:08:15.874Z",

"updated_at": "2021-02-02T19:08:15.874Z"

}

Create and submit a job in a single step using a job spec file

$ cat /tmp/job_spec.json

{

"name": "List job working directory",

"qos": "high",

"command_line": "ls",

"image": "lancium/ubuntu",

"resources": {

"core_count": 4,

"memory": 8

},

"max_run_time": 259200

}

$ lcli job run --spec /tmp/job_spec.json

{

"id": 12647,

"name": "List job working directory",

"status": "created",

"qos": "high",

"command_line": "ls",

"image": "lancium/ubuntu",

"resources": {

"core_count": 4,

"gpu_count": null,

"memory": 8,

"gpu": null,

"scratch": null

},

"max_run_time": 259200,

"created_at": "2021-02-02T19:12:42.376Z",

"updated_at": "2021-02-02T19:12:42.376Z"

}
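Job spec files like the one above are plain JSON, so they can be generated from a script. The sketch below writes the example spec to a temporary file and models the documented rule that flag values override values from the file. The merge_spec helper is hypothetical, and merging the nested resources object field by field is an assumption about how overrides combine.

```python
import json
import tempfile


def merge_spec(file_spec, flag_values):
    """Overlay flag values on a spec loaded from a file; flags win."""
    merged = json.loads(json.dumps(file_spec))  # deep copy via a JSON round trip
    for key, value in flag_values.items():
        if key == "resources":
            merged.setdefault("resources", {}).update(value)
        else:
            merged[key] = value
    return merged


spec = {
    "name": "List job working directory",
    "qos": "high",
    "command_line": "ls",
    "image": "lancium/ubuntu",
    "resources": {"core_count": 4, "memory": 8},
    "max_run_time": 259200,
}

# Write the spec to a file that could be passed to: lcli job run --spec <file>
with tempfile.NamedTemporaryFile("w", suffix=".json", delete=False) as handle:
    json.dump(spec, handle, indent=2)
    spec_path = handle.name

# Models adding --cores 8 --name "bigger job" alongside --spec.
effective = merge_spec(spec, {"name": "bigger job", "resources": {"core_count": 8}})
print(spec_path)
print(json.dumps(effective, indent=2))
```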

Create a job, update its definition and submit

$ lcli job create

{

"id": 12649,

"status": "created",

"qos": "high",

"resources": {

"core_count": 2,

"gpu_count": null,

"memory": 4,

"gpu": null,

"scratch": null

},

"max_run_time": 259200,

"created_at": "2021-02-02T19:17:18.731Z",

"updated_at": "2021-02-02T19:17:18.731Z",

"input_files": []

}

$ lcli job update 12649 --name "example job with updates" --cores 4 \

--command "ls" --image lancium/ubuntu

{

"id": 12649,

"name": "example job with updates",

"status": "created",

"qos": "high",

"command_line": "ls",

"image": "lancium/ubuntu",

"resources": {

"core_count": 4,

"gpu_count": null,

"memory": 4,

"gpu": null,

"scratch": null

},

"max_run_time": 259200,

"created_at": "2021-02-02T19:17:18.731Z",

"updated_at": "2021-02-02T19:19:27.825Z",

"input_files": []

}

$ lcli job update 12649 --ram 8 --notes "more details on updates"

{

"id": 12649,

"name": "example job with updates",

"notes": "more details on updates",

"status": "created",

"qos": "high",

"command_line": "ls",

"image": "lancium/ubuntu",

"resources": {

"core_count": 4,

"gpu_count": null,

"memory": 8,

"gpu": null,

"scratch": null

},

"max_run_time": 259200,

"created_at": "2021-02-02T19:17:18.731Z",

"updated_at": "2021-02-02T19:21:02.394Z",

"input_files": []

}

$ lcli job submit 12649
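The create, update, and submit sequence above can also be driven from a script. This is a hedged sketch rather than a supported client: the helper names are hypothetical, it assumes lcli is on the PATH with credentials already configured, and it relies on the default pretty-printed JSON output being parseable. Keyword names are turned into flags by replacing underscores with hyphens, which does not fit flags that are spelled with an underscore.

```python
import json
import subprocess


def update_command(job_id, **flags):
    """Build an `lcli job update` argument list, e.g. cores=4 becomes --cores 4."""
    argv = ["lcli", "job", "update", str(job_id)]
    for flag, value in flags.items():
        argv += ["--" + flag.replace("_", "-"), str(value)]
    return argv


def submit_command(job_id):
    """Build the `lcli job submit` argument list."""
    return ["lcli", "job", "submit", str(job_id)]


def run_json(argv):
    """Run a CLI command and parse its JSON output; raises on a non-zero exit."""
    result = subprocess.run(argv, check=True, capture_output=True, text=True)
    return json.loads(result.stdout)


def create_update_submit(**flags):
    """Create an empty job, apply updates, submit it, and return the job id."""
    job = run_json(["lcli", "job", "create"])
    run_json(update_command(job["id"], **flags))
    # submit returns no job record, so only the exit code is checked.
    subprocess.run(submit_command(job["id"]), check=True)
    return job["id"]


print(update_command(12649, cores=4, command="ls", image="lancium/ubuntu"))
```

Calling create_update_submit(cores=4, command="ls", image="lancium/ubuntu") would issue the same three commands as the transcript above.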

Run a job that executes a script file uploaded from the local filesystem

$ cat /tmp/script.sh

#!/bin/bash

pwd

ls -l

lscpu

free -h

$ lcli job run --name "execute script" --command "bash script.sh" \

--image lancium/ubuntu --cores 4 --mem 8 --input-file /tmp/script.sh

{

"id": 12653,

"name": "execute script",

"status": "created",

"qos": "high",

"command_line": "bash script.sh",

"image": "lancium/ubuntu",

"resources": {

"core_count": 4,

"gpu_count": null,

"memory": 8,

"gpu": null,

"scratch": null

},

"max_run_time": 259200,

"input_files": [

{

"id": 1247,

"name": "script.sh",

"source_type": "file",

"source": "/tmp/script.sh",

"cache": false,

"upload_complete": true,

"chunks_received": [

[

1,

37

]

]

}

],

"created_at": "2021-02-02T20:11:16.509Z",

"updated_at": "2021-02-02T20:11:16.509Z"

}

Run a job that downloads an input file from a URL and pulls in two input files stored in Lancium persistent storage

$ lcli job run --name "multiple input files" --command "ls -l" --image lancium/ubuntu \

--input-data /vgg16_weights.npz --input-data /tutorials/blast.md \

--input-url https://lancium.github.io/compute-api-docs/api.json

{

"id": 12656,

"name": "multiple input files",

"status": "created",

"qos": "high",

"command_line": "ls -l",

"image": "lancium/ubuntu",

"resources": {

"core_count": 2,

"gpu_count": null,

"memory": 4,

"gpu": null,

"scratch": null

},

"max_run_time": 259200,

"input_files": [

{

"id": 1248,

"name": "vgg16_weights.npz",

"source_type": "data",

"source": "/vgg16_weights.npz",

"cache": false,

"upload_complete": false,

"chunks_received": []

},

{

"id": 1249,

"name": "blast.md",

"source_type": "data",

"source": "/tutorials/blast.md",

"cache": false,

"upload_complete": false,

"chunks_received": []

},

{

"id": 1250,

"name": "api.json",

"source_type": "url",

"source": "https://lancium.github.io/compute-api-docs/api.json",

"cache": false,

"upload_complete": false,

"chunks_received": []

}

],

"created_at": "2021-02-02T20:26:58.477Z",

"updated_at": "2021-02-02T20:26:58.477Z"

}

Run a job that generates an output file that needs to be returned

$ lcli job run --name "retrieve output file" --command "ls -l > ls_results.txt" \

--image lancium/ubuntu -o ls_results.txt

{

"id": 12657,

"name": "retrieve output file",

"status": "created",

"qos": "high",

"command_line": "ls -l > ls_results.txt",

"image": "lancium/ubuntu",

"resources": {

"core_count": 2,

"gpu_count": null,

"memory": 4,

"gpu": null,

"scratch": null

},

"max_run_time": 259200,

"output_files": [

{

"name": "ls_results.txt",

"size": null,

"available": false

}

],

"created_at": "2021-02-02T20:48:12.991Z",

"updated_at": "2021-02-02T20:48:12.991Z"

}

Run a job that generates an output file staged to the data area and which uses environment variables

$ lcli job run --name "stage output file" --command "ls -l > ls_results.txt" \

--image lancium/ubuntu -o ls_results.txt:ls_results.txt -e test=hello

{

"id": 897,

"name": "stage output file",

"status": "created",

"qos": "high",

"command_line": "ls -l > ls_results.txt",

"image": "lancium/ubuntu",

"resources": {

"core_count": 2,

"gpu_count": null,

"memory": 4,

"gpu": null,

"scratch": null,

"mpi": false

},

"max_run_time": 259200,

"output_files": [

{

"name": "ls_results.txt",

"size": null,

"available": false,

"destination": "ls_results.txt"

}

],

"created_at": "2022-07-25T21:13:48.276Z",

"updated_at": "2022-07-25T21:13:48.276Z",

"environment": [

{

"value": "hello",

"variable": "test"

}

]

}

Submit two copies of a job that generates an output file staged to the data area and uses environment variables

$ lcli job run --name "stage output file" --command "ls -l > ls_results.txt" \

--image lancium/ubuntu -o ls_results.txt:ls_results.txt -e test=hello --duplicate 2

{

"id": 898,

"name": "stage output file",

"status": "created",

"qos": "high",

"command_line": "ls -l > ls_results.txt",

"image": "lancium/ubuntu",

"resources": {

"core_count": 2,

"gpu_count": null,

"memory": 4,

"gpu": null,

"scratch": null,

"mpi": false

},

"max_run_time": 259200,

"output_files": [

{

"name": "ls_results.txt",

"size": null,

"available": false,

"destination": "ls_results.txt"

}

],

"created_at": "2022-07-25T21:33:25.005Z",

"updated_at": "2022-07-25T21:33:25.005Z",

"environment": [

{

"value": "hello",

"variable": "test"

}

]

}

{

"id": 899,

"name": "stage output file2",

"status": "created",

"qos": "high",

"command_line": "ls -l > ls_results.txt",

"image": "lancium/ubuntu",

"resources": {

"core_count": 2,

"gpu_count": null,

"memory": 4,

"gpu": null,

"scratch": null,

"mpi": false

},

"max_run_time": 259200,

"output_files": [

{

"name": "ls_results.txt",

"size": null,

"available": false,

"destination": "ls_results2.txt"

}

],

"created_at": "2022-07-25T21:33:28.760Z",

"updated_at": "2022-07-25T21:33:28.760Z",

"environment": [

{

"value": "hello",

"variable": "test"

}

]

}

Working with job input data

In addition to adding job input data via the job specification flags of the run, create, and update subcommands, the CLI allows input data to be added to jobs via a dedicated set of subcommands. Any data files or scripts needed for the job can also be retrieved at execution time instead of being specified as input data. Outgoing network connections from the execution environment are allowed, and files can be retrieved using curl, wget, or any equivalent utility available within the specified image. Currently, Lancium charges no fees for data ingress or egress.

Commands and Flags

There are three commands that are specifically designed for interacting with job input data:

- lcli job input show <job_id> - The show command returns the information for any job input data files currently specified for the given job.

- lcli job input add <options> <job_id> - The add command allows an additional input data file to be associated with the given job. The command requires at least one of the following option flags to indicate the source of the new input file:
  - --file <string> - Indicates that the new input file should be uploaded from the given path on the local file system. If an archive file (.tar.gz or .zip) is specified, it will be automatically expanded inside a folder in the JWD before job execution.
  - --data <string> - Indicates that the new input file should be copied from the given path in the Lancium Persistent Data Service.
  - --url <string> - Indicates that the new input file should be downloaded from the given URL.

  Additionally, there is an optional flag to specify how the input files are handled:
  - --cache - Indicates that the file should be made available to the job via a local (but read-only) cache location. Currently ignored.

- lcli job input delete <option> <job_id> - The delete command allows one or more existing job input files to be deleted from the job. The command requires one of the following flags:
  - --all - Removes all existing input data records from the job.
  - --data-id <data_id> - Removes the specified input data record. Input data IDs are returned from the lcli job show and lcli job input show commands.

Examples

Add a job input file from the local filesystem

$ lcli job input add --file /tmp/script.sh 12714

$ lcli job input show 12714

[

{

"id": 1251,

"name": "script.sh",

"source_type": "file",

"source": "/tmp/script.sh",

"cache": false,

"upload_complete": true,

"chunks_received": [

[

1,

37

]

]

}

]

Add a job input file stored in Lancium’s persistent data service

$ lcli job input add --data /test/vgg16 12714

$ lcli job input show 12714

[

{

"id": 1251,

"name": "script.sh",

"source_type": "file",

"source": "/tmp/script.sh",

"cache": false,

"upload_complete": true,

"chunks_received": [

[

1,

37

]

]

},

{

"id": 1252,

"name": "vgg16",

"source_type": "data",

"source": "/test/vgg16",

"cache": false,

"upload_complete": false,

"chunks_received": []

}

]

Add a job input file that will be downloaded from the internet

$ lcli job input add --url https://lancium.github.io/compute-api-docs/api.json 12714

$ lcli job input show 12714

[

{

"id": 1251,

"name": "script.sh",

"source_type": "file",

"source": "/tmp/script.sh",

"cache": false,

"upload_complete": true,

"chunks_received": [

[

1,

37

]

]

},

{

"id": 1252,

"name": "vgg16",

"source_type": "data",

"source": "/test/vgg16",

"cache": false,

"upload_complete": false,

"chunks_received": []

},

{

"id": 1253,

"name": "api.json",

"source_type": "url",

"source": "https://lancium.github.io/compute-api-docs/api.json",

"cache": false,

"upload_complete": false,

"chunks_received": []

}

]

See a job’s current input files

$ lcli job input show 12714

[

{

"id": 1251,

"name": "script.sh",

"source_type": "file",

"source": "/tmp/script.sh",

"cache": false,

"upload_complete": true,

"chunks_received": [

[

1,

37

]

]

},

{

"id": 1252,

"name": "vgg16",

"source_type": "data",

"source": "/test/vgg16",

"cache": false,

"upload_complete": false,

"chunks_received": []

},

{

"id": 1253,

"name": "api.json",

"source_type": "url",

"source": "https://lancium.github.io/compute-api-docs/api.json",

"cache": false,

"upload_complete": false,

"chunks_received": []

}

]

Delete an input file from a job

$ lcli job input delete --data-id 1253 12714

$ lcli job input show 12714

[

{

"id": 1251,

"name": "script.sh",

"source_type": "file",

"source": "/tmp/script.sh",

"cache": false,

"upload_complete": true,

"chunks_received": [

[

1,

37

]

]

},

{

"id": 1252,

"name": "vgg16",

"source_type": "data",

"source": "/test/vgg16",

"cache": false,

"upload_complete": false,

"chunks_received": []

}

]

Viewing job status

Viewing data about existing jobs can be achieved via a command which returns overview or detailed job information based on the calling arguments.

Commands and Flags

- lcli job show - When called without a specific job ID, the show command will return a list of all of the user’s existing jobs. The information returned is the job’s ID, name and current status.

- lcli job show <job_id> - When called with a job ID, the show command returns the complete job record for the specified job including current status, requested resources, input data and output files.

Examples

Show a list of all jobs

$ lcli job show

[

{

"id": 123,

"name": "test job 1",

"status": "error"

},

{

"id": 456,

"name": "test job 2",

"status": "created"

},

{

"id": 789,

"name": "test job 3",

"status": "finished"

}

]
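Because the listing is JSON, it is straightforward to post-process in a script. The sketch below parses a copy of the sample output above (embedded as a string rather than captured from the CLI) and summarizes it by status; the variable names are illustrative.

```python
import json
from collections import Counter

# Sample output of `lcli job show`, copied from the example above.
listing = """
[
  {"id": 123, "name": "test job 1", "status": "error"},
  {"id": 456, "name": "test job 2", "status": "created"},
  {"id": 789, "name": "test job 3", "status": "finished"}
]
"""

jobs = json.loads(listing)

# Count jobs per status and collect the IDs of finished jobs.
by_status = Counter(job["status"] for job in jobs)
finished_ids = [job["id"] for job in jobs if job["status"] == "finished"]

print(dict(by_status))
print(finished_ids)
```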

Show the details for a specific job

$ lcli job show 789

{

"id": 789,

"name": "test job 3",

"status": "finished",

"qos": "high",

"command_line": "python3 test.py",

"image": "lancium/pytorch-py3",

"resources": {

"core_count": 2,

"gpu_count": 1,

"memory": 8,

"gpu": "k40",

"scratch": 10

},

"max_run_time": 259200,

"input_files": [

{

"id": 138,

"name": "test.py",

"source_type": "file",

"source": "tests/test.py",

"cache": false,

"upload_complete": true,

"chunks_received": [

[

1,

2280

]

]

}

],

"output_files": [

{

"name": "stderr.txt",

"size": 122824,

"available": true

},

{

"name": "stdout.txt",

"size": 1172,

"available": true

}

],

"created_at": "2019-08-06T19:04:47.602Z",

"updated_at": "2019-08-06T19:20:04.557Z",

"submitted_at": "2019-08-06T19:04:48.209Z",

"completed_at": "2019-08-06T19:13:11.000Z",

"cost": "0.02",

"memory_used": 2514698240,

"execution_time": 477

}
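A few derived figures can be computed from a finished job record such as the one above. This sketch assumes that memory_used is reported in bytes and execution_time in seconds; neither unit is stated in this document, so treat the results as illustrative.

```python
from datetime import datetime

TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%S.%fZ"

# Fields copied from the sample job record above.
record = {
    "submitted_at": "2019-08-06T19:04:48.209Z",
    "completed_at": "2019-08-06T19:13:11.000Z",
    "memory_used": 2514698240,
    "execution_time": 477,
}


def parse(timestamp):
    """Parse the timestamp format used in job records."""
    return datetime.strptime(timestamp, TIMESTAMP_FORMAT)


# Time between submission and completion, including any queueing and staging.
wall_seconds = (parse(record["completed_at"]) - parse(record["submitted_at"])).total_seconds()

# Peak memory in GiB, assuming memory_used is a byte count.
memory_used_gib = record["memory_used"] / 2**30

print(f"submitted to completed: {wall_seconds:.3f} s")
print(f"reported execution time: {record['execution_time']} s")
print(f"memory used: {memory_used_gib:.2f} GiB")
```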

Controlling jobs

Users are able to delete defined jobs at any point in their lifecycle. In addition, jobs that are submitted for execution can be terminated prior to completion without deleting the underlying job definition. In the absence of user action, RCLS jobs that are created but never submitted for execution are automatically deleted after 30 days. In addition, finished jobs will remain in the system for 30 days after completion before being automatically deleted.

Commands and Flags

- lcli job terminate <job_id> - The terminate command terminates a submitted job regardless of whether it is actually running. Termination requests may not be reflected in a job’s status immediately due to propagation and polling delays.

- lcli job delete <job_id> - The delete command will remove the job record and all related input and output data from the Lancium Compute Grid. If the job is currently running, the command will return an error unless run with the --force flag.
  - --force - Indicates that the job should be deleted even if currently running. This internally results in job termination prior to deletion.
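The 30-day automatic deletion windows described in this section can be estimated from a job record's timestamps. In this sketch the function name is hypothetical, and starting the clock for never-submitted jobs at created_at is an assumption; the document only says such jobs are deleted after 30 days.

```python
from datetime import datetime, timedelta

RETENTION = timedelta(days=30)
TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%S.%fZ"


def cleanup_time(record):
    """Estimate when a job record becomes eligible for automatic deletion."""
    if record.get("completed_at"):
        start = record["completed_at"]
    elif not record.get("submitted_at"):
        start = record["created_at"]  # assumption: the clock starts at creation
    else:
        return None  # submitted but unfinished: no automatic deletion is described
    return datetime.strptime(start, TIMESTAMP_FORMAT) + RETENTION


finished = {
    "created_at": "2021-02-03T17:21:45.788Z",
    "submitted_at": "2021-02-03T18:43:02.101Z",
    "completed_at": "2021-02-03T18:44:15.000Z",
}
print(cleanup_time(finished))
```

Output files that are still needed should be retrieved before that date, since deletion removes the job's input and output data along with the record.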

Examples

Terminating a submitted job

$ lcli job show 12714

{

"id": 12714,

"name": "example job with input files",

"status": "submitted",

"qos": "high",

"command_line": "ls",

"image": "lancium/ubuntu",

"resources": {

"core_count": 2,

"gpu_count": null,

"memory": 4,

"gpu": null,

"scratch": null

},

"max_run_time": 259200,

"input_files": [

{

"id": 1251,

"name": "script.sh",

"source_type": "file",

"source": "/tmp/script.sh",

"cache": false,

"upload_complete": true,

"chunks_received": [

[

1,

37

]

]

},

{

"id": 1252,

"name": "vgg16",

"source_type": "data",

"source": "/test/vgg16",

"cache": false,

"upload_complete": false,

"chunks_received": []

}

],

"created_at": "2021-02-03T17:21:45.788Z",

"updated_at": "2021-02-03T18:43:02.102Z",

"submitted_at": "2021-02-03T18:43:02.101Z"

}

$ lcli job terminate 12714

$ lcli job show 12714

{

"id": 12714,

"name": "example job with input files",

"status": "finished",

"qos": "high",

"command_line": "ls",

"image": "lancium/ubuntu",

"resources": {

"core_count": 2,

"gpu_count": null,

"memory": 4,

"gpu": null,

"scratch": null

},

"max_run_time": 259200,

"input_files": [

{

"id": 1251,

"name": "script.sh",

"source_type": "file",

"source": "/tmp/script.sh",

"cache": false,

"upload_complete": true,

"chunks_received": [

[

1,

37

]

]

},

{

"id": 1252,

"name": "vgg16",

"source_type": "data",

"source": "/test/vgg16",

"cache": false,

"upload_complete": false,

"chunks_received": []

}

],

"created_at": "2021-02-03T17:21:45.788Z",

"updated_at": "2021-02-03T18:45:13.696Z",

"submitted_at": "2021-02-03T18:43:02.101Z",

"completed_at": "2021-02-03T18:44:15.000Z"

}

Deleting a job

$ lcli job delete 12714

$ lcli job show 12714

Error: The requested Job resource could not be found.

Retrieving job output

Once a job is completed, the Compute Grid looks inside the job working directory for files matching the names of listed output files in the job record. Those files, if any are found, are returned along with the job command’s Standard Output and Standard Error and made available for download.

Commands and Flags

lcli job output show <job_id>

The show command returns a list of the output data files for the specified job along with the size and availability of each file.

lcli job output get <options> <job_id>

The get command returns one or more available output data files to the user. Option flags must be specified to indicate whether the files should be printed to the terminal, saved to a specified local file path, or both.

--view prints the returned file to the terminal. Can be used alone or in conjunction with --save.

--save <path> saves the returned file at the local path provided. Can be used alone or in conjunction with --view.

--all returns all available output data files.

--file <filename> returns the specified output file, if available.
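The flag rules above can be checked before a command is run. The following Python sketch builds the argument list for lcli job output get; the function name is hypothetical, and treating --all and --file as mutually exclusive is an assumption rather than documented behaviour.

```python
def output_get_args(job_id, *, view=False, save=None, filename=None, all_files=False):
    """Build the argument list for 'lcli job output get' from keyword options."""
    if not view and save is None:
        raise ValueError("specify view=True, save=<path>, or both")
    # Assumption: exactly one of --all / --file selects the files to return.
    if all_files == (filename is not None):
        raise ValueError("specify exactly one of all_files=True or filename=<name>")
    args = ["lcli", "job", "output", "get"]
    if view:
        args.append("--view")
    if save is not None:
        args += ["--save", str(save)]
    if all_files:
        args.append("--all")
    else:
        args += ["--file", filename]
    args.append(str(job_id))
    return args


print(" ".join(output_get_args(12653, view=True, filename="stdout.txt")))
# lcli job output get --view --file stdout.txt 12653
```

The resulting list can be handed to subprocess.run on a machine where the CLI is installed.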

Examples

View available output files for a finished job

$ lcli job output show 12653

[

{

"name": "stderr.txt",

"size": 67,

"available": true

},

{

"name": "stdout.txt",

"size": 2216,

"available": true

}

]

View the contents of an output file

$ lcli job output get --view --file stdout.txt 12653

/tmp

total 9

-rw-r--r-- 1 luser gffs 15 Feb 2 20:11 JOBNAME

-rwxr--r-- 1 luser gffs 1515 Feb 2 20:11 qsub5788319659573500900.sh

-rw-rw---- 1 luser gffs 263 Feb 2 20:11 rusage.json

-rwxrwx--- 1 luser gffs 37 Feb 2 20:11 script.sh

-rw-r--r-- 1 luser gffs 67 Feb 2 20:11 stderr.txt

-rw-r--r-- 1 luser gffs 5 Feb 2 20:11 stdout.txt

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 72

On-line CPU(s) list: 0-71

Thread(s) per core: 2

Core(s) per socket: 18

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 79

Model name: Intel(R) Xeon(R) CPU E5-2697 v4 @ 2.30GHz

Stepping: 1

CPU MHz: 2706.175

CPU max MHz: 3600.0000

CPU min MHz: 1200.0000

BogoMIPS: 4599.81

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 46080K

NUMA node0 CPU(s): 0,2,4,6,8,10,12,14,16,18,20,22,24,26,28,30,32,34,36,38,40,42,44,46,48,50,52,54,56,58,60,62,64,66,68,70

NUMA node1 CPU(s): 1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31,33,35,37,39,41,43,45,47,49,51,53,55,57,59,61,63,65,67,69,71

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l3 cdp_l3 invpcid_single pti ssbd ibrs ibpb stibp tpr_shadow vnmi flexpriority ept vpid fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm rdt_a rdseed adx smap intel_pt xsaveopt cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts md_clear flush_l1d

total used free shared buff/cache available

Mem: 188G 21G 132G 3.0M 34G 166G

Swap: 8.0G 19M 8.0G

Download a single output file

$ lcli job output get --save /tmp/job_stderr/ --file stderr.txt 12653

$ ls /tmp/job_stderr/

stderr.txt

Download all available output files

$ lcli job output get --save /tmp/job_output/ --all 12653

$ ls /tmp/job_output/

stderr.txt stdout.txt

Working with Data

As described above, the Lancium Compute Persistent Data Service provides users with a distributed file system within the Lancium Compute Grid to pre-load, store, and reference regularly used job data. Uploaded data is stored in a traditional hierarchical filesystem format with a directory root of ‘/’. Most standard filesystem operations are available via the CLI.

Viewing persistent data

Information about the user’s persistent storage space can be requested on both the directory and file level.

Commands and Flags

lcli data show <path>

The show command retrieves information about the specified path. Directories return a listing of their contents (and related metadata), while files return their metadata, including size and creation and modification dates.

lcli data get <option> <path>

The get command downloads files previously stored as persistent data. One of two options must be passed to indicate the disposition of the download.

--view displays the downloaded file in the terminal window.

--save <path> saves the downloaded file at the path indicated in the local filesystem.

Examples

Listing data paths

$ lcli data show /

[

{

"name": "blast",

"is_directory": true,

"size": null,

"last_modified": null,

"created": null

},

{

"name": "tensorflow",

"is_directory": true,

"size": null,

"last_modified": null,

"created": null

},

{

"name": "test",

"is_directory": true,

"size": null,

"last_modified": null,

"created": null

},

{

"name": "tutorials",

"is_directory": true,

"size": null,

"last_modified": null,

"created": null

},

{

"name": "vgg16_weights.npz",

"is_directory": false,

"size": "553436134",

"last_modified": "2021-02-01T16:35:37.453+00:00",

"created": "2020-07-16T01:20:31.000+00:00"

}

]

$ lcli data show /test

[

{

"name": "tensorflow",

"is_directory": true,

"size": null,

"last_modified": null,

"created": null

},

{

"name": "vgg16",

"is_directory": false,

"size": "553436134",

"last_modified": "2021-02-01T16:39:16.283+00:00",

"created": "2020-08-17T20:43:17.000+00:00"

}

]

Listing a path using a tabular output format

$ lcli data show /test -f table

name        is_directory    size       last_modified                  created
----------  --------------  ---------  -----------------------------  -----------------------------
tensorflow  True
vgg16       False           553436134  2021-02-01T16:36:40.301+00:00  2020-08-17T20:43:17.000+00:00

Viewing a file’s metadata

$ lcli data show /tutorials/blast.md

{

"length": "12753",

"last-modified": "Mon, 1 Feb 2021 17:00:24 +0000",

"date-created": "Mon, 1 Feb 2021 16:55:58 +0000"

}
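File metadata is returned with the size as a string and the dates in an RFC 2822 style format, so a script will usually convert them before use. A minimal sketch in Python, using only the standard library; the function name and the output key names are illustrative choices.

```python
from email.utils import parsedate_to_datetime


def parse_file_metadata(meta):
    """Convert the string fields of a file metadata record into typed values."""
    return {
        "length": int(meta["length"]),
        "last_modified": parsedate_to_datetime(meta["last-modified"]),
        "date_created": parsedate_to_datetime(meta["date-created"]),
    }


info = parse_file_metadata({
    "length": "12753",
    "last-modified": "Mon, 1 Feb 2021 17:00:24 +0000",
    "date-created": "Mon, 1 Feb 2021 16:55:58 +0000",
})
print(info["length"], info["last_modified"].isoformat())
# 12753 2021-02-01T17:00:24+00:00
```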

Viewing a file’s content

$ lcli data get --view /tutorials/blast.md | wc

438 1379 12754

$ lcli data get --view /tutorials/blast.md | head -40

# Running jobs with BLAST using the Lancium CLI

This tutorial shows the basics of running a BLAST search in the Lancium Compute Infrastructure in a few different ways. For simplicity's sake, it assumes that the sequence databases have already been processed via ```makeblastdb``` and have been packaged into a .tar.gz file containing the database files.

```bash

$ ls

cow_db.tar.gz human_db.tar.gz

$ tar ztf cow_db_small.tar.gz

cow.1000.protein.faa

cow.1000.protein.faa.phr

cow.1000.protein.faa.pin

cow.1000.protein.faa.psq

$ tar ztf human_db.tar.gz

human.1.protein.faa

human.1.protein.faa.phr

human.1.protein.faa.pin

human.1.protein.faa.psq

```

## Prerequisites

* A copy of the CLI binary [downloaded](https://portal.lancium.com/downloads/lcli) into user's $PATH and given executable privileges

* An API key generated from a user account on the [Lancium Compute portal](https://portal.lancium.com)

## Authentication

The user's API key can be passed to the CLI either via an environment variable or as part of the command line.

### Environment Variable

```bash

LANCIUM_API_KEY=<API_KEY>

Downloading a file

$ lcli data show /test/vgg16

{

"length": "553436134",

"last-modified": "Mon, 1 Feb 2021 16:41:31 +0000",

"date-created": "Mon, 17 Aug 2020 20:43:17 +0000"

}

$ lcli data get --save /tmp /test/vgg16

$ ls -l /tmp/vgg16

-rw-rw-r-- 1 _______ _______ 528M Feb 1 12:09 /tmp/vgg16
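After a download, the local file size can be compared with the length reported by lcli data show as a quick completeness check. The following Python sketch illustrates the comparison; download_is_complete is a hypothetical helper, and the demonstration uses a temporary file rather than a real download.

```python
import os
import tempfile


def download_is_complete(local_path, metadata):
    """Return True when the local file size matches the reported 'length'."""
    return os.path.getsize(local_path) == int(metadata["length"])


# Demonstration with a small temporary file standing in for a download.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "vgg16")
    with open(path, "wb") as handle:
        handle.write(b"\0" * 1024)
    print(download_is_complete(path, {"length": "1024"}))  # True
```

A matching size does not prove the contents are identical; it only catches truncated transfers.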

Adding new persistent data

Users are able to push new data files into their persistent storage space both from their local machine and the internet at large.

Commands and Flags

lcli data add <source> <data_path>

The add command uploads a new file into the user’s persistent storage space at the path given by <data_path>. The source location is given by passing one of two flags.

--file <path> uploads the file located on the local filesystem at <path>.

--url <url> downloads the file located at the passed URL.

--force indicates that any existing file at <data_path> should be overwritten.

Examples

Uploading a new data file from the local filesystem

$ lcli data add --file blast.md /tutorials/blast.md

$ lcli data show /tutorials -f table

name      is_directory    size    last_modified                  created
--------  --------------  ------  -----------------------------  -----------------------------
blast.md  False           12753   2021-02-01T16:56:23.383+00:00  2021-02-01T16:55:58.000+00:00

Downloading a new data file into persistent storage from the internet

$ lcli data add --url https://lancium.github.io/compute-api-docs/api.json /tutorials/api.json

$ lcli data show /tutorials -f table

name      is_directory    size    last_modified                  created
--------  --------------  ------  -----------------------------  -----------------------------
api.json  False           97078   2021-02-01T22:22:54.431+00:00  2021-02-01T22:22:26.000+00:00
blast.md  False           12753   2021-02-01T22:22:54.499+00:00  2021-02-01T16:55:58.000+00:00

Working with existing persistent data

Once data files are located in the persistent data service, most standard file operations are available to the user.

Commands and Flags

-

lcli data makedir <path>

The makedir command creates a new directory in the user’s persistent storage space at the path given.

-

lcli data copy <source_path> <dest_path>

The copy command duplicates the file at <source_path> into <dest_path>. Specifying a directory as <source_path> will fail unless the -r flag is included.

-r/--recursive recursively copies if <source_path> is a directory.

--force indicates that any existing file at <dest_path> should be overwritten.

-

lcli data move <source_path> <dest_path>

The move command moves the file or directory at <source_path> to <dest_path>. In addition to moving objects to new locations in the user’s storage space, the move command also enables the renaming of objects.

-r/--recursive recursively moves if <source_path> is a directory.

--force indicates that any existing file at <dest_path> should be overwritten.

-

lcli data delete <path>

The delete command deletes the file located at the given path in the user’s storage space.

-r/--recursive allows recursive deletion if <path> is a directory.

Examples

Creating a directory

$ lcli data show / -f table

name               is_directory    size       last_modified                  created
-----------------  --------------  ---------  -----------------------------  -----------------------------
blast              True
tensorflow         True
test               True
vgg16_weights.npz  False           553436134  2021-02-01T16:54:15.565+00:00  2020-07-16T01:20:31.000+00:00

$ lcli data makedir /tutorials

$ lcli data show / -f table

name               is_directory    size       last_modified                  created
-----------------  --------------  ---------  -----------------------------  -----------------------------
blast              True
tensorflow         True
test               True
tutorials          True
vgg16_weights.npz  False           553436134  2021-02-01T16:54:15.565+00:00  2020-07-16T01:20:31.000+00:00

Copying a file

$ lcli data show /test -f table

name        is_directory    size       last_modified                  created
----------  --------------  ---------  -----------------------------  -----------------------------
tensorflow  True
vgg16       False           553436134  2021-02-01T22:34:37.079+00:00  2020-08-17T20:43:17.000+00:00

$ lcli data copy /blast/human_db.tar.gz /test/human_db.tar.gz

$ lcli data show /test -f table

name              is_directory    size       last_modified                  created
----------------  --------------  ---------  -----------------------------  -----------------------------
human_db.tar.gz   False           7770227    2021-02-02T15:20:09.250+00:00  2021-02-02T15:18:51.000+00:00
tensorflow        True
vgg16             False           553436134  2021-02-02T15:20:09.362+00:00  2020-08-17T20:43:17.000+00:00

Moving (or renaming) a file

$ lcli data move /test/human_db.tar.gz /test/human_db-copy.tar.gz

$ lcli data show /test -f table

name                  is_directory    size       last_modified                  created
--------------------  --------------  ---------  -----------------------------  -----------------------------
human_db-copy.tar.gz  False           7770227    2021-02-02T15:33:48.175+00:00  2021-02-02T15:33:31.000+00:00
tensorflow            True
vgg16                 False           553436134  2021-02-02T15:33:48.233+00:00  2020-08-17T20:43:17.000+00:00

Deleting a file

$ lcli data delete /test/human_db-copy.tar.gz

$ lcli data show /test -f table

name        is_directory    size       last_modified                  created
----------  --------------  ---------  -----------------------------  -----------------------------
tensorflow  True
vgg16       False           553436134  2021-02-02T15:34:55.351+00:00  2020-08-17T20:43:17.000+00:00

Working with Images

All Lancium Compute RCLS jobs run within an execution environment hosted in a Singularity container. Lancium provides a number of Singularity images that are pre-configured with popular scientific packages. Additionally, custom images can be created by users from existing Singularity or Docker images. When creating a new image, there are 4 types of image sources that can be utilized. In addition, each source type can be provided to the compute grid in two different ways.

All images are stored in a hierarchical, filesystem-like namespace based on the images’ path name. Lancium images are available from a special lancium/ path available within each user’s image storage. When first created, custom images will be listed as ‘created’ but will be unavailable for use in jobs until the background build process is complete. Images listed as ‘ready’ are available for use in jobs.
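Since only images with a ‘ready’ status can be used in jobs, a script that chooses an image may want to filter a listing first. A minimal sketch in Python, assuming the listing has already been parsed from the JSON returned by lcli image show; both helper names are hypothetical.

```python
def ready_image_paths(listing):
    """Return the paths of images whose status is 'ready'."""
    return [image["path"] for image in listing if image.get("status") == "ready"]


def is_lancium_image(path):
    """Lancium-provided images live under the special 'lancium/' path."""
    return path.startswith("lancium/")


listing = [
    {"path": "lancium/blast", "name": "BLAST+", "status": "ready"},
    {"path": "test/osg", "name": "OSG docker image", "status": "ready"},
    {"path": "test/singularity_upload", "name": "Custom singularity upload", "status": "created"},
]
ready = ready_image_paths(listing)
print(ready)  # ['lancium/blast', 'test/osg']
print([p for p in ready if not is_lancium_image(p)])  # ['test/osg']
```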

Image Sources

- Singularity Images

Building from an existing Singularity image (in either .simg or .sif format) is designated by the singularity_image source type. The source image can either be uploaded directly from local disk via the CLI (using the --file flag) or downloaded from the Sylabs Cloud Library or Singularity Hub (using the --url flag). When specifying the download of an existing image, URLs should be in the form of library://<username>/<image_path> for the Sylabs Cloud Library and shub://<username>/<image_path> for Singularity Hub. Private instances of the Singularity Registry Server can be referenced using shub://<registry>/<username>/<image_path>.

- Docker Images

Building from an existing Docker image is designated by the docker_image source type. The Lancium Compute grid is able to convert existing Docker images that have been exported to an archive via the docker save <image_name> | gzip > <image>.tar.gz command. The archived Docker image can either be uploaded from the local disk via the CLI (using the --file flag) or downloaded from a URL (using the --url flag).

- Singularity Recipes

A custom image can be built from a standard Singularity recipe file via the singularity_file source type. The recipe itself can be uploaded from the local disk via the CLI (using the --build-script flag) or downloaded from a URL (using the --url flag). Currently, the Compute Grid copies only the recipe file into the container build environment. If associated scripts or data are necessary for the %files or %post sections of the build process, they should be downloaded from a remotely-accessible source via curl or wget during the %setup section.

- Dockerfiles

A custom image can be built from a Dockerfile via the docker_file source type. The Dockerfile itself can be uploaded from the local disk via the CLI (using the --build-script flag) or downloaded from a URL (using the --url flag). Currently, the Compute Grid copies only the Dockerfile into the container build environment. As a result, COPY or ADD commands relying on access to related files in the build context will not succeed. Instead, files that need to be inserted into the container should either use ADD with a URL as the source location or a RUN command that downloads the necessary files from a remote source within the container.
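The Dockerfile restriction described above can be checked locally before an image is submitted. The following Python sketch flags COPY and ADD lines that appear to depend on local build-context files. It is a rough, line-based check with a hypothetical function name, not a Dockerfile parser: it ignores line continuations and the JSON array form of these instructions.

```python
def build_context_instructions(dockerfile_text):
    """Return (line_number, line) pairs for COPY/ADD lines that need local files."""
    flagged = []
    for number, raw in enumerate(dockerfile_text.splitlines(), start=1):
        words = raw.split()
        if not words:
            continue
        keyword = words[0].upper()
        options = [w for w in words[1:] if w.startswith("--")]
        operands = [w for w in words[1:] if not w.startswith("--")]
        sources = operands[:-1]  # the last operand is the destination
        if keyword == "COPY":
            # COPY --from=<stage> reads from another build stage, not the context.
            if not any(o.startswith("--from=") for o in options):
                flagged.append((number, raw.strip()))
        elif keyword == "ADD":
            if not all(s.startswith(("http://", "https://")) for s in sources):
                flagged.append((number, raw.strip()))
    return flagged


example = """FROM ubuntu:20.04
COPY app.py /opt/app.py
ADD https://example.com/data.tar.gz /opt/
RUN curl -fsSL -o /opt/tool https://example.com/tool
"""
print(build_context_instructions(example))  # [(2, 'COPY app.py /opt/app.py')]
```

The example.com URLs and file names are placeholders. Any flagged line would need to be rewritten as an ADD with a URL source or as a RUN step that downloads the file.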

Viewing existing images

The CLI allows users to view information about both Lancium-provided images and their own custom images.

Commands and Flags

lcli image show <path>

The show command returns image information. If <path> represents a directory, the command will return summary information including the image path, name, description and status for all images contained within the given path. If <path> represents a single custom user image instead, then the command will return detailed information about the requested image.

Examples

Showing all available images

$ lcli image show

[

{

"path": "lancium/amber",

"name": "Amber",

"description": "Amber 18 with Cuda 9.2",

"status": "ready"

},

{

"path": "lancium/blast",

"name": "BLAST+",

"description": "NCBI Blast version 2.9.0",

"status": "ready"

},

{

"path": "lancium/caffe-py2",

"name": "Caffe (Python 2.7)",

"status": "ready"

},

{

"path": "lancium/caffe-py3",

"name": "Caffe (Python 3.7)",

"status": "ready"

},

{

"path": "lancium/gromacs",

"name": "GROMACS",

"status": "ready"

},

{

"path": "lancium/keras",

"name": "Keras",

"status": "ready"

},

{

"path": "lancium/pytorch-py2",

"name": "PyTorch (Python 2.7)",

"status": "ready"

},

{

"path": "lancium/pytorch-py3",

"name": "PyTorch (Python 3.7)",

"status": "ready"

},

{

"path": "lancium/tensorflow-py2",

"name": "TensorFlow (Python 2.7)",

"status": "ready"

},

{

"path": "lancium/tensorflow-py3",

"name": "TensorFlow (Python 3.7)",

"status": "ready"

},

{

"path": "lancium/theano-py2",

"name": "Theano (Python 2.7)",

"status": "ready"

},

{

"path": "pyten3",

"name": "my tensorflow image",

"status": "ready"

},

{

"path": "test/osg",

"name": "OSG docker image",

"description": "test turning OSG docker image into singularity",

"status": "ready"

},

{

"path": "test/sample",

"name": "Sample singularity image",

"description": "",

"status": "ready"

},

{

"path": "test/bash_job",

"name": "basic container",

"description": "Simple container based on Ubuntu docker image",

"status": "ready"

}

]

Showing images on a subpath

$ lcli image show test

[

{

"path": "test/osg",

"name": "OSG docker image",

"description": "test turning OSG docker image into singularity",

"status": "ready"

},

{

"path": "test/sample",

"name": "Sample singularity image",

"description": "",

"status": "ready"

},

{

"path": "test/bash_job",

"name": "basic container",

"description": "Simple container based on Ubuntu docker image",

"status": "ready"

}

]

Showing detailed information for a user custom image

$ lcli image show test/osg

[

{

"path": "test/osg",

"name": "OSG docker image",

"description": "test turning OSG docker image into singularity",

"source_type": "docker_image",

"status": "ready",

"build_output": "\u001b[33mWARNING:\u001b[0m Authentication token file not found : Only pulls of public images will succeed\n\u001b[34mINFO: \u001b[0m Starting build...\nGetting image source signatures\nCopying blob sha256:174f5685490326fc0a1c0f5570b8663732189b327007e47ff13d2ca59673db02\n\r 0 B / 201.88 MiB \r 2.38 MiB / 201.88 MiB \r 8.34 MiB / 201.88 MiB \r 11.97 MiB / 201.88 MiB \r 14.69 MiB / 201.88 MiB \r 18.03 MiB / 201.88 MiB \r 21.91 MiB / 201.88 MiB \r 25.03 MiB / 201.88 MiB \r 28.19 MiB / 201.88 MiB \r 31.66 MiB / 201.88 MiB \r 34.44 MiB / 201.88 MiB \r 37.41 MiB / 201.88 MiB \r 40.53 MiB / 201.88 MiB \r 45.03 MiB / 201.88 MiB \r 48.50 MiB / 201.88 MiB \r 51.81 MiB / 201.88 MiB \r 54.84 MiB / 201.88 MiB \r 57.72 MiB / 201.88 MiB \r 61.91 MiB / 201.88 MiB \r 66.09 MiB / 201.88 MiB \r 69.06 MiB / 201.88 MiB \r 72.28 MiB / 201.88 MiB \r 75.31 MiB / 201.88 MiB \r 78.34 MiB / 201.88 MiB \r 81.53 MiB / 201.88 MiB \r 83.13 MiB / 201.88 MiB \r 85.78 MiB / 201.88 MiB \r 88.56 MiB / 201.88 MiB \r 91.47 MiB / 201.88 MiB \r 95.88 MiB / 201.88 MiB \r 98.50 MiB / 201.88 MiB \r 101.31 MiB / 201.88 MiB \r 104.13 MiB / 201.88 MiB \r 107.09 MiB / 201.88 MiB \r 110.16 MiB / 201.88 MiB \r 113.88 MiB / 201.88 MiB \r 117.97 MiB / 201.88 MiB \r 121.22 MiB / 201.88 MiB \r 124.81 MiB / 201.88 MiB \r 128.47 MiB / 201.88 MiB \r 132.44 MiB / 201.88 MiB \r 136.06 MiB / 201.88 MiB \r 139.13 MiB / 201.88 MiB \r 142.69 MiB / 201.88 MiB \r 146.00 MiB / 201.88 MiB \r 148.56 MiB / 201.88 MiB \r 150.97 MiB / 201.88 MiB \r 153.50 MiB / 201.88 MiB \r 156.94 MiB / 201.88 MiB \r 161.00 MiB / 201.88 MiB \r 165.81 MiB / 201.88 MiB \r 172.50 MiB / 201.88 MiB \r 177.44 MiB / 201.88 MiB \r 182.13 MiB / 201.88 MiB \r 186.66 MiB / 201.88 MiB \r 191.38 MiB / 201.88 MiB \r 196.13 MiB / 201.88 MiB \r 200.19 MiB / 201.88 MiB \r 201.88 MiB / 201.88 MiB \r 201.88 MiB / 201.88 MiB \r 201.88 MiB / 201.88 MiB \r 201.88 MiB / 201.88 MiB 12s\nCopying blob 
sha256:d8731ae0469b904929216469bde0930550968ccb6d5e2dfe75b887530c15fc7a\n\r 0 B / 92.97 MiB \r 4.94 MiB / 92.97 MiB \r 8.19 MiB / 92.97 MiB \r 10.97 MiB / 92.97 MiB \r 13.72 MiB / 92.97 MiB \r 16.50 MiB / 92.97 MiB \r 19.28 MiB / 92.97 MiB \r 22.94 MiB / 92.97 MiB \r 26.44 MiB / 92.97 MiB \r 29.03 MiB / 92.97 MiB \r 31.75 MiB / 92.97 MiB \r 36.25 MiB / 92.97 MiB \r 40.50 MiB / 92.97 MiB \r 43.53 MiB / 92.97 MiB \r 47.22 MiB / 92.97 MiB \r 51.44 MiB / 92.97 MiB \r 55.56 MiB / 92.97 MiB \r 58.66 MiB / 92.97 MiB \r 62.59 MiB / 92.97 MiB \r 66.03 MiB / 92.97 MiB \r 69.72 MiB / 92.97 MiB \r 74.81 MiB / 92.97 MiB \r 79.22 MiB / 92.97 MiB \r 83.66 MiB / 92.97 MiB \r 88.16 MiB / 92.97 MiB \r 92.34 MiB / 92.97 MiB \r 92.97 MiB / 92.97 MiB \r 92.97 MiB / 92.97 MiB 5s\nCopying blob sha256:8c935d016547591ed03a156c5403bdd9d71e2476e6f6cb388c62b202785ac140\n\r 0 B / 2.50 KiB \r 2.50 KiB / 2.50 KiB 0s\nCopying blob sha256:3df1c82ee5449d6d3dd073c4dd01e8d01bd14e9a61a8ce648d945f6a9625b558\n\r 0 B / 5.50 KiB \r 5.50 KiB / 5.50 KiB 0s\nCopying blob sha256:a2c9e90d41b036041870f41cbb9257c9b47c2ac628aba2d4ef1c5ed9ca774e91\n\r 0 B / 3.50 KiB \r 3.50 KiB / 3.50 KiB 0s\nCopying blob sha256:65b4b4cc75ccb22e18ce89e73b2fe762be5449142e4053d44b3227c8a58d6744\n\r 0 B / 2.50 KiB \r 2.50 KiB / 2.50 KiB 0s\nCopying blob sha256:39b1cdb89777013c3e42914d5c6ec351aeae866498445bfb25bc1c4d4ad2b15f\n\r 0 B / 41.50 KiB \r 41.50 KiB / 41.50 KiB 0s\nCopying blob sha256:4d3d02cef70187afcc1be34dec0114089bf7ad9e47d44a5576989d9b6f91441c\n\r 0 B / 328.86 MiB \r 4.66 MiB / 328.86 MiB \r 8.41 MiB / 328.86 MiB \r 11.66 MiB / 328.86 MiB \r 14.44 MiB / 328.86 MiB \r 18.91 MiB / 328.86 MiB \r 22.25 MiB / 328.86 MiB \r 26.94 MiB / 328.86 MiB \r 31.19 MiB / 328.86 MiB \r 34.25 MiB / 328.86 MiB \r 37.41 MiB / 328.86 MiB \r 40.13 MiB / 328.86 MiB \r 43.06 MiB / 328.86 MiB \r 45.78 MiB / 328.86 MiB \r 48.91 MiB / 328.86 MiB \r 51.78 MiB / 328.86 MiB \r 55.94 MiB / 328.86 MiB \r 60.13 MiB / 328.86 MiB \r 63.50 MiB / 328.86 MiB 
\r 67.56 MiB / 328.86 MiB \r 71.81 MiB / 328.86 MiB \r 75.28 MiB / 328.86 MiB \r 79.06 MiB / 328.86 MiB \r 83.16 MiB / 328.86 MiB \r 87.50 MiB / 328.86 MiB \r 91.53 MiB / 328.86 MiB \r 93.41 MiB / 328.86 MiB \r 99.03 MiB / 328.86 MiB \r 102.53 MiB / 328.86 MiB \r 105.47 MiB / 328.86 MiB \r 108.47 MiB / 328.86 MiB \r 111.38 MiB / 328.86 MiB \r 114.63 MiB / 328.86 MiB \r 117.53 MiB / 328.86 MiB \r 120.56 MiB / 328.86 MiB \r 123.75 MiB / 328.86 MiB \r 128.72 MiB / 328.86 MiB \r 132.00 MiB / 328.86 MiB \r 135.56 MiB / 328.86 MiB \r 139.28 MiB / 328.86 MiB \r 143.03 MiB / 328.86 MiB \r 147.34 MiB / 328.86 MiB \r 151.44 MiB / 328.86 MiB \r 156.00 MiB / 328.86 MiB \r 160.25 MiB / 328.86 MiB \r 164.72 MiB / 328.86 MiB \r 168.78 MiB / 328.86 MiB \r 172.78 MiB / 328.86 MiB \r 177.19 MiB / 328.86 MiB \r 182.25 MiB / 328.86 MiB \r 185.84 MiB / 328.86 MiB \r 189.94 MiB / 328.86 MiB \r 193.81 MiB / 328.86 MiB \r 197.44 MiB / 328.86 MiB \r 200.91 MiB / 328.86 MiB \r 203.88 MiB / 328.86 MiB \r 206.69 MiB / 328.86 MiB \r 209.47 MiB / 328.86 MiB \r 212.97 MiB / 328.86 MiB \r 216.50 MiB / 328.86 MiB \r 219.72 MiB / 328.86 MiB \r 222.38 MiB / 328.86 MiB \r 226.06 MiB / 328.86 MiB \r 228.84 MiB / 328.86 MiB \r 231.59 MiB / 328.86 MiB \r 234.53 MiB / 328.86 MiB \r 237.53 MiB / 328.86 MiB \r 240.63 MiB / 328.86 MiB \r 243.25 MiB / 328.86 MiB \r 246.28 MiB / 328.86 MiB \r 250.09 MiB / 328.86 MiB \r 253.31 MiB / 328.86 MiB \r 258.53 MiB / 328.86 MiB \r 262.56 MiB / 328.86 MiB \r 266.56 MiB / 328.86 MiB \r 270.13 MiB / 328.86 MiB \r 273.09 MiB / 328.86 MiB \r 276.00 MiB / 328.86 MiB \r 281.06 MiB / 328.86 MiB \r 286.09 MiB / 328.86 MiB \r 290.94 MiB / 328.86 MiB \r 295.53 MiB / 328.86 MiB \r 301.88 MiB / 328.86 MiB \r 306.69 MiB / 328.86 MiB \r 310.53 MiB / 328.86 MiB \r 315.00 MiB / 328.86 MiB \r 319.81 MiB / 328.86 MiB \r 324.59 MiB / 328.86 MiB \r 328.86 MiB / 328.86 MiB \r 328.86 MiB / 328.86 MiB \r 328.86 MiB / 328.86 MiB \r 328.86 MiB / 328.86 MiB \r 328.86 MiB / 328.86 MiB \r 328.86 
MiB / 328.86 MiB 18s\nCopying blob sha256:b741453c8091dc6b28a6240abddb4fb6ef9075185d11474aee8edf97cc323d8d\n\r 0 B / 7.50 KiB \r 7.50 KiB / 7.50 KiB 0s\nCopying blob sha256:3732cabbfb4f901b5b80fb46ac1cb9eaafdced6513d07c5d8dbab9e64cc181c7\n\r 0 B / 6.00 KiB \r 6.00 KiB / 6.00 KiB 0s\nCopying blob sha256:853d8de0c974e87bfdca20705722dd8d812c4e66dc957069a15a8610e3727880\n\r 0 B / 5.00 KiB \r 5.00 KiB / 5.00 KiB 0s\nCopying blob sha256:ef94fd9f06a6600393abd6a77bfdb8e27b42a111a62f9b147ab44b434f573d7e\n\r 0 B / 3.00 KiB \r 3.00 KiB / 3.00 KiB 0s\nCopying blob sha256:5943dc577caf76ea6316afbb201b37c718e67eb2397483385d7ef6aa94d905e2\n\r 0 B / 3.00 KiB \r 3.00 KiB / 3.00 KiB 0s\nCopying blob sha256:aee73fd4c7f869cea5266dde29c4265687d8a02a932b9454d367e4648651c235\n\r 0 B / 10.50 KiB \r 10.50 KiB / 10.50 KiB 0s\nCopying blob sha256:2e4a04879683ed4e11a74930766be0307a0ec6b24f491b20972f88f2f3421409\n\r 0 B / 1.50 KiB \r 1.50 KiB / 1.50 KiB 0s\nCopying config sha256:a7787b9104bb4482e7bc5e3c30919755d61d7f1748cb9f84a84340b8463c1540\n\r 0 B / 6.59 KiB \r 6.59 KiB / 6.59 KiB 0s\nWriting manifest to image destination\nStoring signatures\n\u001b[34mINFO: \u001b[0m Creating SIF file...\n\u001b[34mINFO: \u001b[0m Build complete: /build/image.sif\n\n",

"created_at": "2020-12-30T17:10:48.257Z",

"updated_at": "2020-12-30T17:18:38.969Z",

"built_at": "2020-12-30T17:18:38.959Z",

"chunks_received": [

[

1,

210761835

]

]

}

]
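The build_output field shown above contains ANSI colour codes and carriage-return progress updates, which makes it hard to read as raw JSON. A minimal Python sketch for reducing it to readable lines follows; the function name is hypothetical, and the sample string is a shortened excerpt with a placeholder blob hash.

```python
import re

ANSI_COLOR = re.compile(r"\x1b\[[0-9;]*m")  # colour sequences such as ESC[34m


def clean_build_output(raw):
    """Strip colour codes and keep only the final state of each progress line."""
    cleaned = []
    for line in ANSI_COLOR.sub("", raw).split("\n"):
        final_state = line.split("\r")[-1]   # text after the last carriage return
        text = " ".join(final_state.split())  # collapse runs of whitespace
        if text:
            cleaned.append(text)
    return cleaned


sample = ("\u001b[34mINFO: \u001b[0m Starting build...\n"
          "Copying blob sha256:abc123\n"
          "\r 0 B / 2.50 KiB \r 2.50 KiB / 2.50 KiB 0s\n"
          "Writing manifest to image destination\n")
for line in clean_build_output(sample):
    print(line)
```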

Adding new custom images

New custom images can be created using any desired <path> as long as it does not conflict with an existing image path.

Commands and Flags

lcli image add <options> <path>

The add command creates a new custom image and schedules the image for background creation.

--name assigns a user-readable name for the new image.

--description/-d/--notes provides optional, detailed notes on the image.

--type indicates the source type of the new image. Valid values are singularity_image, singularity_file, docker_image, and docker_file. Image source types are described above.

--file indicates the path on the local filesystem to the source for the new image. Only valid for singularity_image and docker_image.

--build-script indicates the path on the local filesystem to the build instructions for the new image. Only valid for singularity_file and docker_file.

--url indicates the URL from which to download the source for the new image. Valid for all source types.

-e <string>=<string>/--env <string>=<string> sets environment variables in the image (job environment variables), specified as VARIABLE=value. Can be specified multiple times to set multiple variables.
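The pairing of source types and source flags described above can be validated before calling the CLI. The following Python sketch encodes those rules; the function name is hypothetical, and requiring exactly one source option per image is an assumption rather than documented behaviour.

```python
# Which source options each source type accepts, per the flag descriptions above.
VALID_SOURCES = {
    "singularity_image": {"file", "url"},
    "docker_image": {"file", "url"},
    "singularity_file": {"build_script", "url"},
    "docker_file": {"build_script", "url"},
}


def source_flag(source_type, *, file=None, build_script=None, url=None):
    """Return the CLI flag to use for the given source, or raise ValueError."""
    if source_type not in VALID_SOURCES:
        raise ValueError(f"unknown source type: {source_type}")
    candidates = (("file", file), ("build_script", build_script), ("url", url))
    given = [name for name, value in candidates if value is not None]
    if len(given) != 1:  # assumption: one source per image
        raise ValueError("provide exactly one of file, build_script, or url")
    option = given[0]
    if option not in VALID_SOURCES[source_type]:
        raise ValueError(f"--{option.replace('_', '-')} is not valid for {source_type}")
    return "--" + option.replace("_", "-")


print(source_flag("docker_image", url="docker://node:lts"))               # --url
print(source_flag("singularity_file", build_script="fortran_build_recipe"))  # --build-script
```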

Examples

Creating a new image from a pre-existing, uploaded Singularity image

$ file custom_build.simg

custom_build.simg: a /usr/bin/env run-singularity script executable (binary data)

$ lcli image add --name "Custom singularity upload" \

--description "Custom image based on uploaded pre-built singularity image" \

--type singularity_image --file custom_build.simg test/singularity_upload

Uploading image to 'test/singularity_upload'... |████████████████████████████████████| 100%

{

"path": "test/singularity_upload",

"name": "Custom singularity upload",

"description": "Custom image based on uploaded pre-built singularity image",

"source_type": "singularity_image",

"size": 106266624

}

$ lcli image show test/singularity_upload

[

{

"path": "test/singularity_upload",

"name": "Custom singularity upload",

"description": "Custom image based on uploaded pre-built singularity image",

"source_type": "singularity_image",

"status": "created",

"created_at": "2021-01-29T20:59:41.350Z",

"updated_at": "2021-01-29T21:00:43.261Z",

"upload_complete": true,

"chunks_received": [

[

1,

106266624

]

]

}

]

..... time passes .....

$ lcli image show test/singularity_upload

[

{

"path": "test/singularity_upload",

"name": "Custom singularity upload",

"description": "Custom image based on uploaded pre-built singularity image",

"source_type": "singularity_image",

"status": "building",

"created_at": "2021-01-29T20:59:41.350Z",

"updated_at": "2021-01-29T21:01:52.931Z",

"upload_complete": true,

"chunks_received": [

[

1,

106266624

]

]

}

]

..... time passes .....

$ lcli image show test/singularity_upload

[

{

"path": "test/singularity_upload",

"name": "Custom singularity upload",

"description": "Custom image based on uploaded pre-built singularity image",

"source_type": "singularity_image",

"status": "ready",

"build_output": "-- Validating uploaded Singularity image --\n{\n\t\"attributes\": {\n\t\t\"labels\": \"{\\n\\t\\\"org.label-schema.build-date\\\": \\\"Wednesday_13_November_2019_16:58:7_UTC\\\",\\n\\t\\\"org.label-schema.schema-version\\\": \\\"1.0\\\",\\n\\t\\\"org.label-schema.usage.singularity.deffile.bootstrap\\\": \\\"docker\\\",\\n\\t\\\"org.label-schema.usage.singularity.deffile.from\\\": \\\"python:3.6\\\",\\n\\t\\\"org.label-schema.usage.singularity.version\\\": \\\"641e3fc-dirty\\\"\\n}\"\n\t},\n\t\"type\": \"container\"\n}\n\n",

"created_at": "2021-01-29T20:59:41.350Z",

"updated_at": "2021-01-29T21:07:04.637Z",

"built_at": "2021-01-29T21:07:04.637Z",

"upload_complete": true,

"chunks_received": [

[

1,

106266624

]

]

}

]

Creating a custom image from a Docker Hub source

$ lcli image add --name "Docker Build" \

--description "Custom image based on the official Node LTS Docker release" \

--type docker_image --url docker://node:lts test/npm_build

{

"path": "test/npm_build",

"name": "Docker Build",

"description": "Custom image based on the official Node LTS Docker release",

"source_type": "docker_image",

"source_url": "docker://node:lts"

}

Creating a custom image with an environment variable

$ lcli image add --name pythonDockerURL --url docker://python --type docker_image --env test=hello test/pythonDockerURL

{

"path": "test/pythonDockerURL",

"name": "pythonDockerURL",

"source_type": "docker_image",

"source_url": "docker://python",

"environment": [

{

"variable": "test",

"value": "hello"

}

]

}

Creating a custom image from a Singularity recipe

$ cat fortran_build_recipe

Bootstrap: docker

From: ubuntu:latest

%post

apt-get update

apt-get install build-essential gfortran-8

%runscript

gfortran-8 -o "$@" "$@.f80"

exec "$@"

$ lcli image add --name "Fortran" \

--description "Compile and run a simple fortran program passed into the container" \

--type singularity_file --build-script fortran_build_recipe test/fortran_compiler

{

"path": "test/fortran_compiler",

"name": "Fortran",

"description": "Compile and run a simple fortran program passed into the container",

"source_type": "singularity_file",

"build_script": "Bootstrap: docker\n\nFrom: ubuntu:latest\n\n%post\n apt-get update\n apt-get install build-essential gfortran-8\n\n%runscript\n gfortran-8 -o \"$@\" \"$@.f80\"\n\texec \"$@\"\n"

}
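The three creation examples above differ only in which flags are passed to `lcli image add`. As an illustration, the sketch below assembles that argument list in Python from the flags that appear in the examples (`--name`, `--description`, `--type`, `--url`, `--build-script`, `--env`). The function name `image_add_args` is hypothetical, and repeating `--env` once per variable is an assumption that the examples above do not confirm.

```python
import shlex


def image_add_args(path, name, source_type, description=None, url=None,
                   build_script=None, env=None):
    """Build the argv list for `lcli image add` from keyword arguments."""
    args = ["lcli", "image", "add", "--name", name]
    if description is not None:
        args += ["--description", description]
    args += ["--type", source_type]
    if url is not None:
        args += ["--url", url]
    if build_script is not None:
        args += ["--build-script", build_script]
    # Assumption: one --env flag per VARIABLE=value pair.
    for variable, value in (env or {}).items():
        args += ["--env", f"{variable}={value}"]
    args.append(path)
    return args


if __name__ == "__main__":
    argv = image_add_args(
        "test/pythonDockerURL", "pythonDockerURL", "docker_image",
        url="docker://python", env={"test": "hello"},
    )
    # shlex.join quotes each argument for safe pasting into a shell.
    print(shlex.join(argv))
```

Passing the resulting list to `subprocess.run` avoids shell quoting problems with names and descriptions that contain spaces.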

Working with existing custom images

Once an image has been created, the user can update its definition, trigger a rebuild, or delete it.

Commands and Flags

lcli image update <options> <path>

The update command allows the information about an existing custom image to be modified. This includes not only the image metadata, but also the image source type and location of the source data. If any image source related values are changed, the image will revert to a ‘created’ status and will require rebuilding before the image is available for job use. The <options> flags available for modifying image information are the same as those listed above for image add.

--rebuild

Automatically trigger an image rebuild if required. If this flag is not supplied and the image is reverted to ‘created’ status due to a change in image source data, the image will be unavailable for use until the rebuild command described below is issued.

lcli image rebuild <path>

The rebuild command triggers a background rebuild of the custom image by the Compute grid. If the source data for the image was specified by the --url flag, the source URL will be re-downloaded as part of the image build process. During the rebuild process the custom image will be unavailable for job use.

lcli image delete <path>

The delete command removes the custom image from the Lancium Compute Grid.
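The rule that a change to source-related values reverts an image to ‘created’ status can be expressed as a small check. The Python sketch below compares two image records of the kind printed by `lcli image show`. Which fields count as source related is an assumption based on the field names in the sample output (`source_type`, `source_url`, `build_script`); the grid may consider others.

```python
# Assumption: these are the fields that describe the image source.
SOURCE_FIELDS = ("source_type", "source_url", "build_script")


def source_changed(before, after):
    """Return True if any source-related field differs between two records."""
    return any(before.get(field) != after.get(field) for field in SOURCE_FIELDS)


def needs_rebuild(before, after, rebuild_flag=False):
    """Return True if a separate `lcli image rebuild` would be required.

    A rebuild is needed when the source changed and the update was not
    issued with the --rebuild flag.
    """
    return source_changed(before, after) and not rebuild_flag
```

For example, changing only the name or description leaves the image usable, while changing the source URL without `--rebuild` calls for a follow-up `lcli image rebuild <path>`.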

Examples

Updating a custom image’s source information and rebuilding

$ lcli image show test/npm_build

[

{

"path": "test/npm_build",

"name": "Docker Build",

"description": "Custom image based on the official Node LTS Docker release",

"source_type": "docker_image",

"source_url": "docker://node:lts",

"status": "ready",

"build_output": "\u001b[33mWARNING:\u001b[0m Authentication token file not found : Only pulls of public images will succeed\n\u001b[34mINFO: \u001b[0m Starting build...\nGetting image source signatures\nCopying blob sha256:2587235a7635c6991dfee9791c7977ab29694cf73bc64c3c5a79097ca99364d1\n\r 0 B / 43.28 MiB \r 10.51 MiB / 43.28 MiB \r 17.19 MiB / 43.28 MiB \r 23.28 MiB / 43.28 MiB \r 33.27 MiB / 43.28 MiB \r 43.28 MiB / 43.28 MiB \r 43.28 MiB / 43.28 MiB 1s\nCopying blob sha256:953fe5c215cb5f929e0e42e5a1011f33edce9278a650faf10655e855a670f79f\n\r 0 B / 10.26 MiB \r 10.26 MiB / 10.26 MiB \r 10.26 MiB / 10.26 MiB 0s\nCopying blob sha256:d4d3f270c7deffd353181076af3b5746c8dbeac5abf454169a75e7822587bdab\n\r 0 B / 4.14 MiB \r 4.14 MiB / 4.14 MiB 0s\nCopying blob sha256:ed36dafe30e3d9c4fde74478dae686f851d7e93b719dc3165d8eb7e8be9305d9\n\r 0 B / 47.79 MiB \r 7.65 MiB / 47.79 MiB \r 17.01 MiB / 47.79 MiB \r 27.26 MiB / 47.79 MiB \r 42.51 MiB / 47.79 MiB \r 47.79 MiB / 47.79 MiB \r 47.79 MiB / 47.79 MiB 1s\nCopying blob sha256:00e912dd434d537c339ad16f37836cef5f5984fe0da0d0399fa79f47e31f1057\n\r 0 B / 204.38 MiB \r 11.14 MiB / 204.38 MiB \r 26.14 MiB / 204.38 MiB \r 41.12 MiB / 204.38 MiB \r 57.41 MiB / 204.38 MiB \r 64.89 MiB / 204.38 MiB \r 82.11 MiB / 204.38 MiB \r 97.83 MiB / 204.38 MiB \r 113.09 MiB / 204.38 MiB \r 122.62 MiB / 204.38 MiB \r 134.32 MiB / 204.38 MiB \r 147.36 MiB / 204.38 MiB \r 162.82 MiB / 204.38 MiB \r 178.25 MiB / 204.38 MiB \r 195.54 MiB / 204.38 MiB \r 204.38 MiB / 204.38 MiB \r 204.38 MiB / 204.38 MiB \r 204.38 MiB / 204.38 MiB \r 204.38 MiB / 204.38 MiB \r 204.38 MiB / 204.38 MiB 3s\nCopying blob sha256:dd25ee3ea38e0207708e76e6dcd112e86b43dfbff71976f17827b8188174922f\n\r 0 B / 4.07 KiB \r 4.07 KiB / 4.07 KiB 0s\nCopying blob sha256:2d11ef6090f142e5b5dd762481a98ee13e015e6ce2818e267fb7253ce186a04e\n\r 0 B / 32.19 MiB \r 14.34 MiB / 32.19 MiB \r 30.09 MiB / 32.19 MiB \r 32.19 MiB / 32.19 MiB 0s\nCopying blob 
sha256:da2a7c713c5d4b10626ac1de3e62c56df5d4ec07b0941ed2a9c510fd8bf6dd49\n\r 0 B / 2.27 MiB \r 2.27 MiB / 2.27 MiB 0s\nCopying blob sha256:2251290c38606882ab660b15e139ac5aed0b738ce9a055be2417417c12a9bd9a\n\r 0 B / 295 B \r 295 B / 295 B 0s\nCopying config sha256:6dd606a4bef80799405e433da62ac2c330e55ad71996ad6c8fc59554c60443ed\n\r 0 B / 6.71 KiB \r 6.71 KiB / 6.71 KiB 0s\nWriting manifest to image destination\nStoring signatures\n\u001b[34mINFO: \u001b[0m Creating SIF file...\n\u001b[34mINFO: \u001b[0m Build complete: /build/image.sif\n\n",

"created_at": "2021-01-29T21:19:41.592Z",

"updated_at": "2021-01-29T21:24:53.141Z",

"built_at": "2021-01-29T21:24:53.130Z"

}

]

$ lcli image update --name "Node 10.23" \

--description "Custom image based on the official Node 10.23 Docker release" \

--url docker://node:10.23.1 test/npm_build

{

"path": "test/npm_build",

"name": "Node 10.23",

"description": "Custom image based on the official Node 10.23 Docker release",

"source_type": "docker_image",

"source_url": "docker://node:10.23.1",

"status": "created",

"build_output": "\u001b[33mWARNING:\u001b[0m Authentication token file not found : Only pulls of public images will succeed\n\u001b[34mINFO: \u001b[0m Starting build...\nGetting image source signatures\nCopying blob sha256:2587235a7635c6991dfee9791c7977ab29694cf73bc64c3c5a79097ca99364d1\n\r 0 B / 43.28 MiB \r 10.51 MiB / 43.28 MiB \r 17.19 MiB / 43.28 MiB \r 23.28 MiB / 43.28 MiB \r 33.27 MiB / 43.28 MiB \r 43.28 MiB / 43.28 MiB \r 43.28 MiB / 43.28 MiB 1s\nCopying blob sha256:953fe5c215cb5f929e0e42e5a1011f33edce9278a650faf10655e855a670f79f\n\r 0 B / 10.26 MiB \r 10.26 MiB / 10.26 MiB \r 10.26 MiB / 10.26 MiB 0s\nCopying blob sha256:d4d3f270c7deffd353181076af3b5746c8dbeac5abf454169a75e7822587bdab\n\r 0 B / 4.14 MiB \r 4.14 MiB / 4.14 MiB 0s\nCopying blob sha256:ed36dafe30e3d9c4fde74478dae686f851d7e93b719dc3165d8eb7e8be9305d9\n\r 0 B / 47.79 MiB \r 7.65 MiB / 47.79 MiB \r 17.01 MiB / 47.79 MiB \r 27.26 MiB / 47.79 MiB \r 42.51 MiB / 47.79 MiB \r 47.79 MiB / 47.79 MiB \r 47.79 MiB / 47.79 MiB 1s\nCopying blob sha256:00e912dd434d537c339ad16f37836cef5f5984fe0da0d0399fa79f47e31f1057\n\r 0 B / 204.38 MiB \r 11.14 MiB / 204.38 MiB \r 26.14 MiB / 204.38 MiB \r 41.12 MiB / 204.38 MiB \r 57.41 MiB / 204.38 MiB \r 64.89 MiB / 204.38 MiB \r 82.11 MiB / 204.38 MiB \r 97.83 MiB / 204.38 MiB \r 113.09 MiB / 204.38 MiB \r 122.62 MiB / 204.38 MiB \r 134.32 MiB / 204.38 MiB \r 147.36 MiB / 204.38 MiB \r 162.82 MiB / 204.38 MiB \r 178.25 MiB / 204.38 MiB \r 195.54 MiB / 204.38 MiB \r 204.38 MiB / 204.38 MiB \r 204.38 MiB / 204.38 MiB \r 204.38 MiB / 204.38 MiB \r 204.38 MiB / 204.38 MiB \r 204.38 MiB / 204.38 MiB 3s\nCopying blob sha256:dd25ee3ea38e0207708e76e6dcd112e86b43dfbff71976f17827b8188174922f\n\r 0 B / 4.07 KiB \r 4.07 KiB / 4.07 KiB 0s\nCopying blob sha256:2d11ef6090f142e5b5dd762481a98ee13e015e6ce2818e267fb7253ce186a04e\n\r 0 B / 32.19 MiB \r 14.34 MiB / 32.19 MiB \r 30.09 MiB / 32.19 MiB \r 32.19 MiB / 32.19 MiB 0s\nCopying blob 
sha256:da2a7c713c5d4b10626ac1de3e62c56df5d4ec07b0941ed2a9c510fd8bf6dd49\n\r 0 B / 2.27 MiB \r 2.27 MiB / 2.27 MiB 0s\nCopying blob sha256:2251290c38606882ab660b15e139ac5aed0b738ce9a055be2417417c12a9bd9a\n\r 0 B / 295 B \r 295 B / 295 B 0s\nCopying config sha256:6dd606a4bef80799405e433da62ac2c330e55ad71996ad6c8fc59554c60443ed\n\r 0 B / 6.71 KiB \r 6.71 KiB / 6.71 KiB 0s\nWriting manifest to image destination\nStoring signatures\n\u001b[34mINFO: \u001b[0m Creating SIF file...\n\u001b[34mINFO: \u001b[0m Build complete: /build/image.sif\n\n",

"created_at": "2021-01-29T21:19:41.592Z",

"updated_at": "2021-01-29T21:59:26.884Z",

"built_at": "2021-01-29T21:24:53.130Z"

}

$ lcli image rebuild test/npm_build

$ lcli image show test/npm_build

[

{

"path": "test/npm_build",

"name": "Node 10.23",

"description": "Custom image based on the official Node 10.23 Docker release",

"source_type": "docker_image",

"source_url": "docker://node:10.23.1",

"status": "building",

"created_at": "2021-01-29T21:19:41.592Z",

"updated_at": "2021-01-29T22:00:23.948Z",

"built_at": "2021-01-29T21:24:53.130Z"

}

]
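The `build_output` field in the records above is hard to read because it carries ANSI color codes (`\u001b[...m`) and carriage-return progress updates. The following Python sketch shows one way to reduce it to plain log lines. It assumes the string has already been decoded from JSON, for instance with `json.loads`; the function name `clean_build_output` is illustrative.

```python
import re

# Matches ANSI color sequences such as ESC[33m and ESC[0m.
ANSI_ESCAPE = re.compile(r"\x1b\[[0-9;]*m")


def clean_build_output(raw):
    """Strip color codes and collapse progress updates in a build log."""
    text = ANSI_ESCAPE.sub("", raw)
    lines = []
    for line in text.split("\n"):
        # A carriage return rewinds to the start of the line, so keeping
        # only the text after the last "\r" approximates what a terminal
        # would show once the progress display has finished.
        lines.append(line.rsplit("\r", 1)[-1].strip())
    return "\n".join(lines).strip()
```

Applied to the record for `test/npm_build`, `clean_build_output(record["build_output"])` would leave one line per copied blob instead of every intermediate progress value.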

Deleting a custom image

$ lcli image delete test/fortran_compiler

$ lcli image show test

[

{

"path": "test/npm_build",